Artificial Intelligence

Machines that pretend to think

Audience: Newcomers to AI, decision-makers considering implementing AI, senior leadership, AI enthusiasts

Contents

Executive Summary

Introduction

What Is Artificial Intelligence?

AI Hazards: Hype

AI is Limited as a Disruptive Technology

Two Broad Categories of AI

AI is Best Thought of as Automation

AI Hazards: Hallucinations, Context Window Limitations

Conclusions

Footnotes and References

Executive Summary

This brief introductory article defines artificial intelligence, characterizes its main types, and explains the caveats that come with the technology. We note the enormous hype surrounding the topic and set some initial expectations for those new to AI. We also touch on some of its strengths and weaknesses, and on where readers can learn more.

Introduction

In this brief article, we introduce newcomers to the field of artificial intelligence (AI), describe what the phrase means, sketch the big picture, and explain how AI fits into the larger topic of automation. We note that, given the very large amount of money poured into AI, there is, regrettably but understandably, an enormous amount of hype attached to the topic, along with more than a few wild and unsubstantiated claims about its capabilities.

AI is useful in a limited fashion, and we take some time to consider its strengths and weaknesses. Having used various AIs for more than a year as a regular part of developing our automation business, we describe the uses we have found, as well as the pitfalls and limitations of the technology.

AI is overblown, and is far from the panacea its boosters sometimes claim. It does have real capabilities, however, and when used critically and wisely it can consistently boost the output and productivity of its users.

We go into some detail about the principal limitations of artificial intelligence technology, in an effort to set readers’ expectations realistically. In sum, we feel the technology is valuable but limited. That said, free AI chatbots allow users to explore the technology in some detail, giving them an excellent chance of staying current in this emerging field. We believe that knowledge and use of AI are important competitive necessities.

What Is Artificial Intelligence?

Per ChatGPT 3.5:

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to mimic human cognitive functions. These machines are designed to perform tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, learning, and language translation.

Key components of artificial intelligence include:

Machine Learning: AI systems often use machine learning algorithms to analyze and learn patterns from data. This enables the system to improve its performance over time without being explicitly programmed.

Natural Language Processing (NLP): NLP allows machines to understand, interpret, and generate human language. It is a crucial component for applications like chatbots, language translation, and sentiment analysis.

Computer Vision: This involves giving machines the ability to interpret and understand visual information from the world, such as images and videos. It is used in facial recognition, object detection, and image classification.

Expert Systems: These are AI systems that emulate the decision-making abilities of a human expert in a particular domain. They use rules and knowledge bases to provide solutions to specific problems.

Robotics: AI plays a significant role in robotics, enabling robots to perceive their environment, make decisions, and execute tasks autonomously.

AI can be categorized into two types:

Narrow or Weak AI: This type of AI is designed and trained for a particular task. It excels in performing specific functions but lacks the broad cognitive abilities of human intelligence.

General or Strong AI: This is a more advanced form of AI that possesses the ability to understand, learn, and apply knowledge across a wide range of tasks, similar to human intelligence. Achieving strong AI is a long-term goal in AI research.

AI has applications across various domains, including healthcare, finance, education, entertainment, and more. As technology advances, AI continues to evolve, potentially leading to innovative solutions and improvements in a variety of industries.

End ChatGPT 3.5.

AI Hazards: Hype

Begin Overlogix.

Readers interested in AI can profit from reading about the timeline and history of AI, and from applying critical thinking to claims of advances or breakthroughs. The field is dominated by hype that implies capabilities which often disappear upon close examination. AI is a tool, albeit a complex one. So are programming languages and databases.

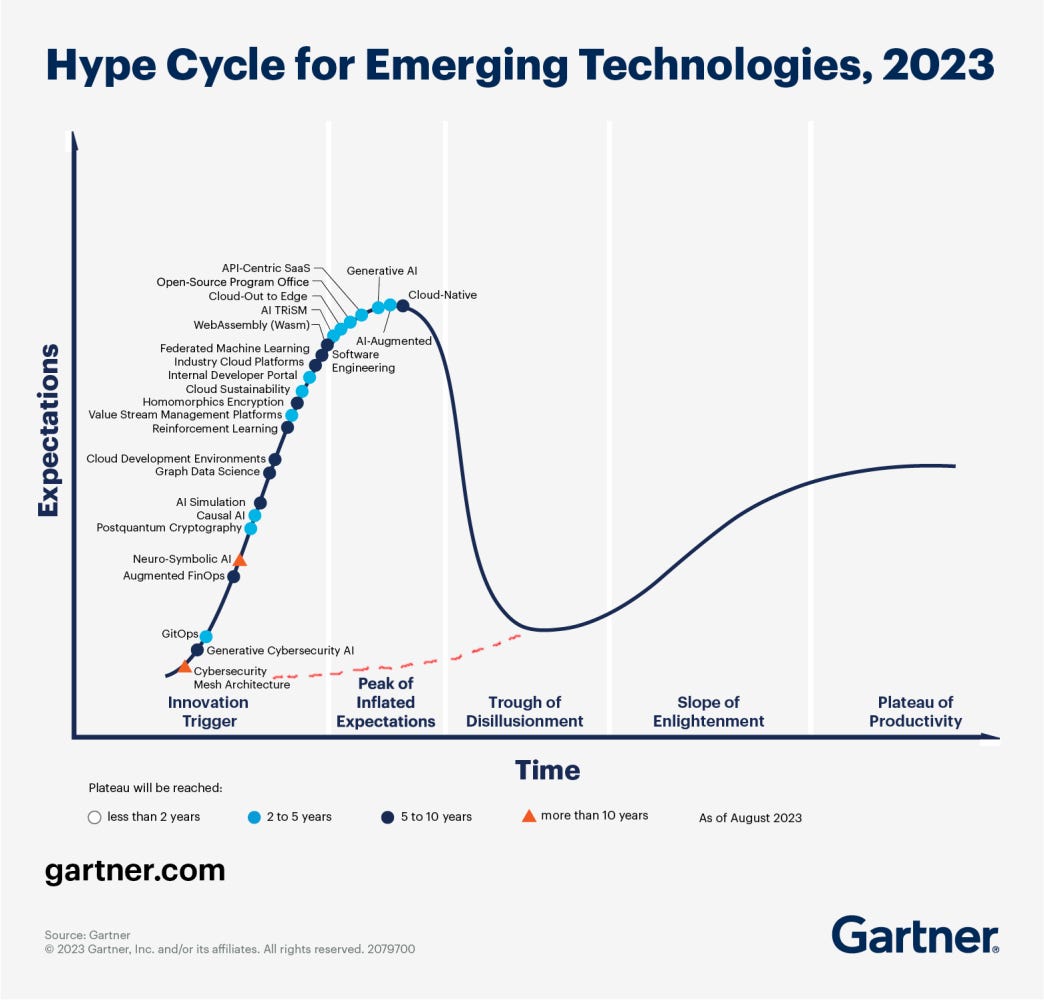

This image from research firm Gartner gives a conceptual view of AI hype, amplified by a series of events represented by the dots. We added the red dashed line above the label “Peak of Inflated Expectations” to show what we consider a more likely trajectory for the technology. We expect the hype cycle to crash, as Gartner predicts, and we look past it to more gradual improvement. Development and testing of AI are difficult.

Gartner’s view is refreshingly sober; see a recent article on the subject. We think a thorough reading of Gartner’s writings on AI is worth the time, along with direct experience using AI tools such as ChatGPT and Google Gemini. Doing so will immunize users of AI against unfounded reliance on the technology. We know of no better antidote to hype.

AI is Limited as a Disruptive Technology

Our interest in AI centers on augmenting human effort with AI, specifically for programming and automation. We view AI as yet another method of automation, and do not consider it much of a paradigm shift. We have found some positive results, particularly when prototyping code. We have also noticed that we can overwhelm AIs with too many simultaneous requirements, and so we keep requests very focused and narrow.

After well over a year of experimenting with various AI chatbots, namely ChatGPT, Google Gemini, Anthropic’s Claude (excellent but chat-limited), Microsoft’s Copilot (sadly lobotomized a few months ago, RIP), xAI’s Grok and Perplexity, we have consistently achieved productivity gains of around 5 to 10%, a factor of between 1.05x and 1.10x. These modest gains are important, and they do compound over time.
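To put the claim that modest gains compound into numbers, here is a small Python sketch. It rests on an assumption of ours, not on a measurement: that the same 5% or 10% gain applies in every period (say, each year) and that successive gains multiply. The function name `compounded_factor` is hypothetical.

```python
# Illustrative only: ASSUMES the same gain repeats every period and that
# successive gains multiply. This is not a measured result.

def compounded_factor(gain_per_period: float, periods: int) -> float:
    """Return the overall output multiplier after `periods` periods."""
    return (1.0 + gain_per_period) ** periods

for gain in (0.05, 0.10):
    for periods in (1, 5, 10):
        factor = compounded_factor(gain, periods)
        print(f"{gain:.0%} per period, {periods:2d} periods -> {factor:.2f}x")
```

Under that assumption, ten periods at 5% come to roughly 1.63x and ten periods at 10% to roughly 2.59x; whether real productivity gains stack this neatly is an open question.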

We discount breathless claims of productivity improvements beyond this as most likely hype. Most of the chatbots have context windows too small to handle commercially important business problems, and they manifestly lack reasoning skills. More importantly, as OpenAI has had to sheepishly admit with its Orion project, all current AGI candidates are large language models (LLMs), and their capabilities have more or less plateaued.

Two Broad Categories of AI

We divide AI into two main categories: artificial general intelligence (AGI, a.k.a. strong AI), as promoted by OpenAI with ChatGPT, Google with Gemini, and others; and narrow AI (a.k.a. weak AI), usually set up for very specific tasks. At the time of writing, there is no widely accepted definition of AGI beyond vague statements such as “outperforms humans on a wide variety of tasks”. Since such a statement is not measurable, there is no firm notion of what AGI is, and so, strictly speaking, AGIs do not yet exist.

When we want to be pedantic about the subject, or to remind readers “there ain’t no sech animal”, we use the term AGI candidates instead of AGI. Colloquially, we often drop the “candidate” and just say AGI, with the proviso that the term refers to an aspiration rather than a reality. We use AGI candidates largely as research concierges and coding assistants. Narrow AI is already used in a variety of commercial systems, and is likely to find new applications in the near future.

Weak or narrow AI refers to AI that has been trained to perform well in specialized tasks. For example, an AI specifically trained in Python programming is likely to excel at such tasks, provided the tasks given to it are sufficiently narrow.

Artificial general intelligence (AGI) specifically refers to AI that can outperform humans on a wide range of tasks. As far as we know, no such system currently exists.

Note that this class division is more fanciful than real. Candidates for the title of strong AI, such as ChatGPT and Google Gemini, exhibit uneven performance when asked questions beyond their pre-training.

Additionally, and crucially, AGI candidates require intense levels of computing power, often at high cost, which limits the accessibility of the technology. We read of leaders in the field proposing to spend trillions of dollars developing AI, nosebleed-level numbers exceeding the annual military budget of even the United States.

AI is Best Thought of as Automation

AI is a rapidly evolving field, a subset of automation centered on the concept of an algorithm or model trained on massive datasets for either general or specific tasks. We agree that AI is intended to mimic human thought, but doubt that any AI can currently think on its own. We have not yet found evidence of true cognitive powers in AI, and agree with the amusing epithet for AI: “stochastic parrots”.

Every AI can work with only a limited number of tokens (words or pieces of words) at a time, and what it does with them is, in short, guess the next word in a sequence. The accuracy of those guesses is a direct result of statistical inference derived from the massive data used to train the AI. A recent comment by an AI expert claims that the performance of an AI depends more on the data used to train it than on the algorithm that runs it. In simple language, the narrower the training, the better the answers, within the bounds of that training.
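The idea of guessing the next word from training statistics can be shown with a drastically simplified toy. The Python sketch below counts which word followed which in a tiny “training” text and then guesses the most frequent follower. Real LLMs use neural networks over subword tokens and far longer contexts, so this is an analogy, not how they are built; the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

# Toy "next word guesser" built from bigram counts: a stand-in for the
# statistical inference described above, not a real language model.

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def guess_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # outside the training data: nothing to go on
    return counts.most_common(1)[0][0]

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(guess_next(model, "the"))  # "cat" followed "the" twice in the corpus
print(guess_next(model, "dog"))  # never seen in training, so no guess
```

The guess is only as good as the counts behind it: within the training text the toy answers confidently, and outside it the toy has nothing at all.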

AI Hazards: Hallucinations, Context Window Limitations

Hallucinations: Artificial intelligence systems such as ChatGPT and Google Gemini are known to hallucinate, that is, to produce confident but false output. We have experienced many AI hallucinations in recent interactions, which led to our dictum of constantly quality-checking every result obtained from AI. These issues severely limit AI’s utility. We expect the same of every AI we try, although we expect the situation to improve gradually over time.

Despite massive but finite training datasets, current AI systems are statistical word-guessing generators that mimic human responses but lack real understanding and creativity. Put simply, AI does well in areas of dense pre-training, but increasingly spouts nonsense as the tasks put to it veer away from its training.
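For AI-generated code, the dictum of constant quality checking can be made concrete. The Python sketch below, an illustration under our own assumptions, runs a function an assistant might have written against cases whose answers are already known and reports any mismatches. The sample function and the helper `failing_cases` are hypothetical. Note that `exec` runs the code with full privileges and is not a sandbox, so this is only appropriate for code you have already read.

```python
# Check a (hypothetical) AI-suggested function against known answers
# before trusting it.

AI_SUGGESTED_SOURCE = '''
def celsius_to_fahrenheit(c):
    return c * 9 / 5 + 32
'''

def failing_cases(source: str, func_name: str,
                  cases: list[tuple[tuple, object]]) -> list:
    """Run `func_name` from `source` on each case; return the failures."""
    namespace: dict = {}
    exec(source, namespace)  # NOT a sandbox: only run code you have read
    func = namespace[func_name]
    failures = []
    for args, expected in cases:
        try:
            result = func(*args)
        except Exception as exc:  # a crash counts as a failure too
            failures.append((args, expected, repr(exc)))
            continue
        if result != expected:
            failures.append((args, expected, result))
    return failures

known_answers = [((0,), 32), ((100,), 212), ((-40,), -40)]
print(failing_cases(AI_SUGGESTED_SOURCE, "celsius_to_fahrenheit", known_answers))
```

An empty list means every known case passed. That does not prove the code correct; it only means the code has not yet been caught out.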

By analogy with humans, we consider short-term memory, long-term memory and working memory. These notions are meaningful in human terms and have analogues, however inexact, in machines.

Long-term memory in an AI system: The pre-training of an AI, in other words the data that forms a large fraction of its permanent programming, is a fundamental component of the machine’s long-term memory. Some AI experts consider pre-training to almost completely characterize a given AI, with the algorithm that controls it taking second place.

Consider solving a math problem as an example. A math professor, sophisticated in math and with considerable background knowledge, would naturally seek the most general possible answer to a given problem. A math student, on the other hand, would more likely seek a quick, specific answer to complete the task. Both solve the problem, but their contexts differ. The math professor has a much greater working memory than the student, even if both have similar short-term memory.

The machine analogue of short-term memory and working memory is called the context window. What distinguishes one AI from another lies in its pre-training: an AI specifically trained in math might draw larger, more general conclusions than one simply trained in calculation, much like our math professor and student.
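A context window can be pictured as a fixed budget of tokens. The Python sketch below assumes one simple policy, keeping the newest messages and dropping the oldest once the budget is spent, and approximates tokens as whitespace-separated words. Real chat products count tokens with their own tokenizers and may handle overflow differently (for example, by summarizing). The function name and sample history are hypothetical.

```python
# Sketch of a fixed-size context window. ASSUMPTIONS: one token per
# whitespace-separated word, and a "drop the oldest first" policy.

def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the window."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):   # walk backwards from the newest
        cost = len(message.split())
        if used + cost > max_tokens:
            break                        # this and everything older is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))          # restore chronological order

history = [
    "Requirement 1: use Python 3.",
    "Requirement 2: read the CSV file.",
    "Requirement 3: skip blank lines.",
    "Now write the function.",
]
print(fit_to_context(history, max_tokens=12))
```

With a 12-word budget, the two oldest requirements silently fall out of the window, which is one way a model can appear to forget instructions when given too many at once.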

We asked Google Gemini for its point of view.

Overlogix: What is a "context window" in AI?

Gemini: In AI, a context window refers to the amount of data a large language model (LLM) can consider at one time to understand and respond to a prompt or question. It's like the model's short-term memory, allowing it to analyze the recent flow of information for better comprehension.

Here's a breakdown of how context windows work:

Limited Memory: Unlike humans, LLMs don't have perfect memory.* They can only process a limited amount of information at once.

Focus on Recent Input: The context window defines this "limited amount." It determines how much of the conversation history or previous instructions the model considers when generating a response.

Improved Understanding: A larger context window allows the model to take into account more context, leading to a more relevant and coherent response.

Here's an analogy:

Imagine you're having a conversation. With a small memory (short context window), you might forget what was said a few sentences back, making your responses disconnected. A larger memory (long context window) allows you to reference earlier parts of the conversation for a smoother flow.

Benefits of Larger Context Windows:

More Coherent Responses: By considering a wider context, the model can generate responses that are more relevant to the overall conversation.

Improved Reasoning: A larger context window allows the model to follow complex reasoning chains and make connections between different parts of the conversation.

Better Task Completion: When the model remembers past instructions and context, it can perform tasks that require multiple steps more effectively.

Challenges of Larger Context Windows:

Computational Cost: Processing a larger amount of data requires more computing power, which can be expensive and resource-intensive.

Potential for Bias: If the training data contains biases, a larger context window might amplify those biases in the model's responses.

Diminishing Returns: There's a point where adding more context doesn't necessarily improve the response quality.

Current State of Context Windows:

Recent advancements in AI research have led to the development of LLMs with much larger context windows compared to earlier models.

The ideal context window size depends on the specific task and the capabilities of the LLM.

In essence, a context window is crucial for LLMs to understand the flow of information and generate meaningful responses.

* Ahem! Human beings lack perfect memory too, and can only process so much information at a time. Our capacity can be expanded through practice and training; however, like machines, we can only consider so much information at once.

Overlogix: What term in human psychology is closest to the AI "context window"?

Gemini: The term in human psychology closest to the AI concept of a "context window" is working memory.

Here's why working memory is a good fit:

Limited Capacity: Both working memory and context windows have a limited capacity. They can only hold a certain amount of information at a time.

Focus on Recent Information: Just like a context window prioritizes recent input, working memory focuses on the most recently processed information.

Crucial for Comprehension: Both working memory and context windows are essential for understanding information and generating appropriate responses. In humans, working memory allows us to follow a conversation, solve problems, and complete tasks that require keeping multiple pieces of information in mind at once.

However, it's important to note some key differences:

Biological vs. Computational: Working memory is a biological phenomenon arising from the interplay of different brain regions. Context windows are computational constructs designed by AI researchers.

Flexibility: Human working memory is more flexible than a context window. We can actively manipulate the information we hold in working memory, while a context window is typically fixed in size and focuses on the most recent input.

Overall, while not a perfect match, working memory is the closest concept in human psychology that captures the essence of an AI context window.

Conclusions

In conclusion, AI is an emerging technology in rapid development that is currently used, in a limited manner, as part of a general program of automation. We have offered food for thought above to help distinguish hype from results, and will publish our own results here.

The rapid apparent development of artificial intelligence is mostly hype rather than reality. Gains to be had by consistent use of AI are modest at best and require considerable experience and discipline to exploit.

AI in its current form should be considered as just another form of automation, and one that is still in its infancy, despite claims otherwise. Very specific, narrowly focused AI does have current economic value and is used in such fields as medical diagnoses, manufacturing and forecasting.

AGI is largely a pipe dream, doesn’t currently exist, isn’t profitable and likely won’t be for some time to come. It is unfortunate that AGI hogs the AI spotlight, since that is likely to cause the AGI bubble to burst, spectacularly and soon, and may harm the much more useful and modest narrow AI field. In our opinion, narrow AI is the real hero of the AI world, and certainly is the only profitable component.

Currently available AIs tend to hallucinate, forcing careful users to fact-check and validate results diligently. Additionally, most AIs available today have very limited context windows, or working memory; they are stateless and cannot retain much context. These limitations severely restrict the utility of current AI, which can therefore deliver only modest productivity gains.

All that being said, we believe now is the right time for market participants to accustom themselves to AI. AI is entering the mainstream now, is still accessible for users so they can familiarize themselves with it for free, and is worthy of attention.

Footnotes and References

Thank you for reading this article!

More information about Overlogix can be found at Welcome to Overlogix!

We currently publish on both LinkedIn (general interest articles, summaries, TL;DR’s: easier and faster to read) and Substack (in-depth articles, how-to’s, technical studies and new approaches to business).

· Introduction: Welcome to Overlogix!

· The Overlogix Sunday Times Our newsletter, with occasional specials, published roughly every two weeks.

· Master Index All our articles can be found from here in two clicks.

· The Overlogix Table of Context All Overlogix articles in reverse chronological order

· Applied Artificial Intelligence: Index of Articles One of our specialties is Applied AI. This index lists all relevant articles on the topic, in reverse chronological order.

· Applied AI: Stories in the News Our semi-permanent, curated listing of interesting and important news from the world of artificial intelligence, from many different sources.

· Index: Getting a Job Up until recently, getting a job, much less a good job, has been a nightmare for most job seekers. We publish articles on how and why this is so, and what job hunters can do to find the perfect job for them. We also supply credible external resources, so people can consider their alternatives.

· Starting a B2B Business For everyone who can, we heartily recommend starting your own business. The tools are there, and there has never been a better time to do it.

· Building Our Own Robot We’re automating Overlogix from the start, and this series of articles tells exactly how we are doing it.

· Rebuilding the Linux Server: Index of Articles Running AI on your own machine (recommended) requires a modern, up-to-date operating system, and often a lot of additional software infrastructure. This series, dedicated to exactly that sort of system administration, details what we have done to build a powerful server that runs both databases and artificial intelligence, locally.

· The Gospel According to ChatGPT Conversations with various AIs and additional articles on the various challenges associated with actually making profitable use of artificial intelligence.

· TL;DR: Index of Fast Reads Brief, fast reads on various topics in artificial intelligence. If you are a beginner at AI, or a busy human needing fast and factual explanations of complicated technical topics, this is the place to start.

· TL;DR: Overlogix Artificial Intelligence Mini-Wiki Same Fast Reads as previous but arranged in a mini-wiki format some folks may like better.

· Welcome to the Overlogix Substack

· Overlogix: Table of Context Index to our Substack articles arranged by topics.

· Criteria for Paid Content Rules for what goes behind our paywall.

· Curated IT and AI Sources Annotated links to sites and YouTube channels we think are valuable.
